The great red wine compound, resveratrol, is at it again. Disclaimer: 150 mg of resveratrol per day is too low a dose, and 30 days too short a duration, to detect anything close to what was seen in the infamous resveratrol mouse study (Baur et al., 2006 Nature), which showed resveratrol to be the best drug ever on the planet.

This study, on the other hand, utilized the highest-quality study design and was published in a great journal, but was a flop. And the media got it wrong too: “Resveratrol holds key to reducing obesity and associated risks.” No, it doesn’t.

Calorie restriction-like effects of 30 days of resveratrol supplementation on energy metabolism and metabolic profile in obese humans (Timmers et al., 2011 Cell Metabolism)

The study design was pristine. Kudos.

Sample size too small (n=11) and study duration too short (30 days), but it was a randomized, double-blind, placebo-controlled, crossover study. And although this type of drug study does not require such a thorough assessment of compliance (a pill count would’ve sufficed), the authors tested blood levels of resveratrol and its metabolites… cool.

On the docket: resVida, DSM Nutritional Products, Ltd.


Divide and conquer

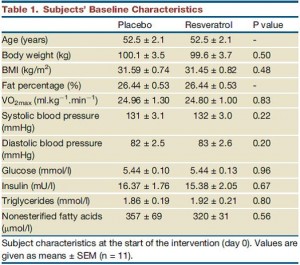

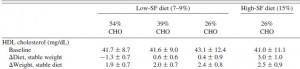

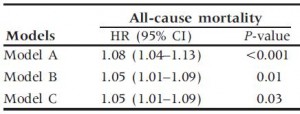

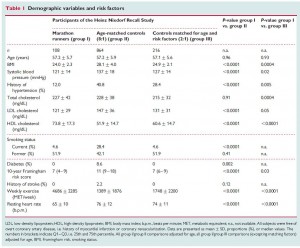

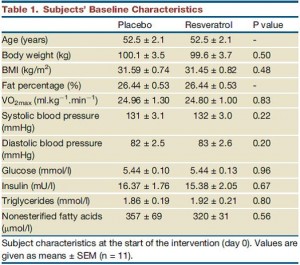

The table above shows baseline characteristics in placebo and treatment groups, but this is peculiar because although the study was randomized (which is confirmed by the high degree of similarity between the two groups), it was also a crossover.

Brief review of my Prelude to a Crossover series (I & II):

phase 1) half the subjects get drug and half get placebo

phase 2) both groups get nothing for a washout period

phase 3) everybody switches and gets the other treatment

There are technically two baseline periods (before phase 1 and at the end of the phase 2 washout), and all the subjects are in both. As such, there is only one set of baseline values, so I’m not sure what the data in the above chart actually reflect. Is this a mistake? Or do these data represent only one of the treatment sessions (which would be an egregious insult to the prestigious crossover design)?
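Because every subject in a crossover receives both treatments, the natural comparison is within subject: each person’s resveratrol value minus their own placebo value. The sketch below is a minimal illustration of that idea, assuming plain lists of end-of-period values ordered by subject; `paired_crossover_summary` is a hypothetical helper, and the example numbers are made up, not taken from Timmers et al. A complete crossover analysis would also check for period and carryover effects, which this sketch deliberately omits.

```python
import math
import statistics


def paired_crossover_summary(resveratrol, placebo):
    """Within-subject summary for a two-treatment crossover (hypothetical helper).

    resveratrol[i] and placebo[i] must belong to the SAME subject i,
    so each subject serves as their own control.
    """
    if len(resveratrol) != len(placebo):
        raise ValueError("each subject needs one value per treatment")
    diffs = [r - p for r, p in zip(resveratrol, placebo)]
    n = len(diffs)
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)   # sample SD of the within-subject differences
    se = sd_diff / math.sqrt(n)         # standard error of the mean difference
    t_stat = mean_diff / se             # paired t statistic with n - 1 degrees of freedom
    return {"n": n, "mean_diff": mean_diff, "sd_diff": sd_diff, "t": t_stat}


# Made-up values for four subjects, for illustration only.
summary = paired_crossover_summary([10, 12, 9, 14], [11, 13, 11, 14])
print(summary)
```

The point of the design is that only one group of people exists, so the treatment effect is estimated from differences inside that group rather than from two separate “baseline” columns.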

In any case, the subjects were all clinically obese (~100 kg [220 pounds], BMI > 30, body fat > 25%) but otherwise metabolically healthy (fasting glucose < 100 mg/dL).

But here’s where it starts going from technically flawed to weird:

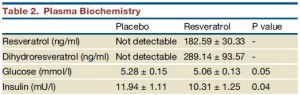

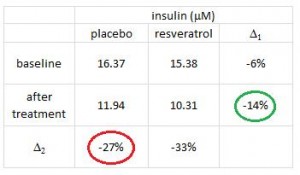

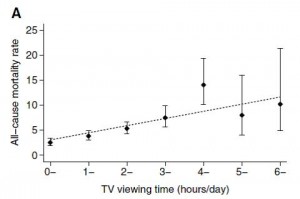

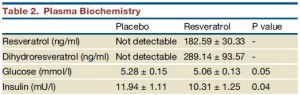

Insulin levels may have been statistically significantly lower after resveratrol compared to placebo, but not after accounting for the fact that baseline insulin was ~15-16 mU/L in both groups.


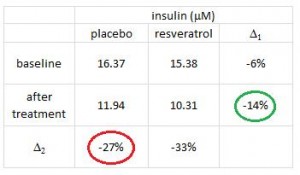

insulin proper

The authors noted that after treatment, insulin levels were 14% lower with resveratrol than with placebo (green circle). BUT whatever was in that placebo pill was almost twice as good! The placebo reduced insulin levels by 27% (red circle)! (take THAT!) I’m glad the authors reported these data instead of burying them, but they illustrate yet another flaw: the change during the placebo period alone was larger than the difference attributed to resveratrol.
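The “almost twice as good” comparison is a ratio of the two percentages quoted above (27 / 14), but the two percentages are measured against different reference points. A small helper makes that explicit; `pct_change` is a hypothetical name, and the only numbers used are the 27% and 14% figures from the text.

```python
def pct_change(reference, value):
    """Percent change of `value` relative to `reference` (negative = decrease)."""
    return 100.0 * (value - reference) / reference


# The two headline numbers use different reference points:
#   placebo effect:      end of placebo period vs. pre-treatment baseline
#   resveratrol effect:  end of resveratrol period vs. end of placebo period
placebo_drop = 27.0             # % reduction on placebo (from the text)
resveratrol_vs_placebo = 14.0   # % lower on resveratrol than placebo (from the text)

ratio = placebo_drop / resveratrol_vs_placebo
print(f"placebo / resveratrol ratio: {ratio:.2f}")

# Usage: a drop from 100 to 73 units is a 27% decrease.
print(pct_change(100, 73))  # -27.0
```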

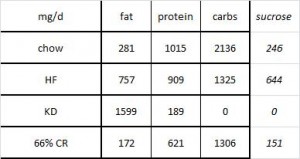

150 mg resveratrol (10-15 bottles of red wine) for a 220-pound person = 1.5 mg/kg, 200x less than what Baur gave his mice (300 mg/kg). Interestingly, however, this produced plasma levels of resveratrol almost 3x higher (180 vs. 65 ng/mL). I have no idea how this happened, but the benefits and lack of toxicity [at such a low dose] bode well for recreational resveratrol supplementation.
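The dose arithmetic in that paragraph can be checked in a few lines. This sketch uses only numbers stated in the text (150 mg/d, ~100 kg, 300 mg/kg in mice, 180 vs. 65 ng/mL) and keeps the text’s simple per-kilogram comparison; it applies no interspecies scaling, and `mg_per_kg` is a hypothetical helper.

```python
LB_PER_KG = 2.20462  # pounds per kilogram


def mg_per_kg(dose_mg, body_mass_kg):
    """Daily dose normalized to body mass."""
    return dose_mg / body_mass_kg


human_mass_kg = 220 / LB_PER_KG        # ~99.8 kg, i.e. the "~100 kg" subjects
human_dose = mg_per_kg(150, 100)       # 1.5 mg/kg, using the rounded 100 kg
mouse_dose = 300.0                     # mg/kg, the mouse figure quoted in the text
fold_lower = mouse_dose / human_dose   # how many times lower the human dose is
plasma_ratio = 180 / 65                # human vs. mouse plasma resveratrol (ng/mL)

print(f"220 lb = {human_mass_kg:.1f} kg")
print(f"human dose: {human_dose:.1f} mg/kg")
print(f"mouse dose is {fold_lower:.0f}x higher")
print(f"human plasma level is {plasma_ratio:.2f}x the mouse level")
```

The plasma ratio comes out just under 3x, which is the “almost 3x higher” in the text.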

As mentioned above, resveratrol was totally safe, but how to interpret this is unclear. Our options are: 1) good; 2) meaningless; or 3) simply not bad (which I suppose is kind of like #2). It could be interpreted as meaningless because resveratrol, the anti-aging drug, is meant to be taken for a very VERY long time (i.e., forever). This study showed that resveratrol was safe when taken for 30 days, which is considerably shorter than forever.

Furthermore, the dose was phenomenally low, ~150 mg/d, so anything other than “totally safe” would be a huge red flag.

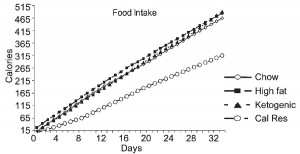

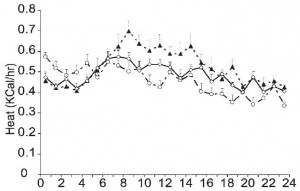

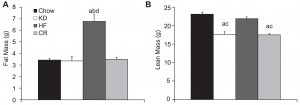

Does resveratrol in fact mimic calorie restriction, as stated in the title? During calorie restriction, food intake declines (by definition), metabolic rate and insulin levels also decline, but free fatty acids and fat oxidation increase. In the resveratrol group, metabolic rate and insulin declined (recall, however, that the placebo was pretty impressive in this regard as well), but free fatty acids and fat oxidation decreased. Although proper calorie restriction trials in humans haven’t happened yet, some of these effects don’t jibe.

A decline in metabolic rate will reduce the amount of fat burned. But relative fat oxidation also declined, which compounds into what could be a profound reduction in fat burning. Coupled with no change in food intake (noted by the authors), this will result in increased fat mass. Energy Balance 101: no ifs, ands, or buts. This study was far too short-term to detect a meaningful increase in fat mass, but if these preliminary findings are true (and my interpretation of the data is correct), then this drug might just make you fat.
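To see why a lower metabolic rate and a lower fat share compound, here is a back-of-envelope sketch. It assumes the common rough approximation that the fraction of energy from fat scales linearly between an RQ of 0.70 (all fat) and 1.00 (all carbohydrate), ignoring protein; real tables are slightly nonlinear. All input numbers are hypothetical and not from Timmers et al.

```python
RQ_FAT = 0.70   # approximate RQ when oxidizing only fat
RQ_CARB = 1.00  # approximate RQ when oxidizing only carbohydrate


def fat_fraction(rq):
    """Rough share of energy from fat: linear interpolation between RQ 0.70 and 1.00."""
    rq = min(max(rq, RQ_FAT), RQ_CARB)  # clamp to the fat-to-carbohydrate range
    return (RQ_CARB - rq) / (RQ_CARB - RQ_FAT)


def fat_burned_kcal(energy_expenditure_kcal, rq):
    """Energy from fat oxidation given total expenditure and RQ."""
    return energy_expenditure_kcal * fat_fraction(rq)


def energy_balance_kcal(intake_kcal, energy_expenditure_kcal):
    """Positive = surplus (stored), negative = deficit."""
    return intake_kcal - energy_expenditure_kcal


# Hypothetical numbers: intake held constant while expenditure falls and RQ rises.
intake = 2000.0
scenarios = {"before": (2000.0, 0.85), "after": (1950.0, 0.88)}

for label, (ee, rq) in scenarios.items():
    print(label,
          f"fat burned: {fat_burned_kcal(ee, rq):.0f} kcal/d,",
          f"balance: {energy_balance_kcal(intake, ee):+.0f} kcal/d")
```

With these made-up inputs, a small drop in expenditure plus a small rise in RQ cuts fat oxidation by roughly a fifth, while unchanged intake turns a neutral balance into a daily surplus.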

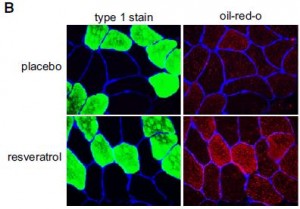

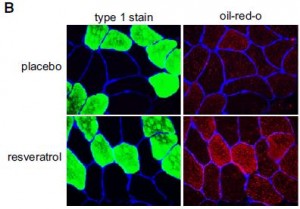

Oddly enough, they did detect an increase in fat accumulation in skeletal muscle:

(perhaps instead of calling it a calorie restriction-mimetic, the authors should’ve gone with exercise-mimetic, citing the athlete’s paradox; e.g., van Loon and Goodpaster, 2006)

In contrast to the popular antidiabetic drug rosiglitazone, which shifts fat storage from the liver (where it causes a host of health maladies) to adipose tissue (where it can be stored safely indefinitely), resveratrol shifted fat storage from liver to skeletal muscle. This is interesting because while the fat storage capacity of adipose tissue is seemingly unlimited, I doubt the same is true for skeletal muscle, which needs to do a lot of stuff, like flex.

If these findings are true, which I seriously question, then it would be interesting to see what happens to skeletal muscle fat stores after a few months, considering they doubled in only 30 days (this is unbelievable, literally).
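To put “doubled in only 30 days” in perspective, a naive extrapolation helps. This sketch treats 30 days as one month and muscle fat in relative units (baseline = 1), and compares continued doubling with continued linear gain; `naive_projection` is a hypothetical helper, and neither curve is a physiological prediction.

```python
def naive_projection(baseline, months, fold_per_month=2.0, compounding=True):
    """Project a quantity that changed by `fold_per_month` over one month.

    compounding=True  -> keeps doubling every month (exponential)
    compounding=False -> keeps adding the first month's absolute gain (linear)
    """
    if compounding:
        return baseline * fold_per_month ** months
    return baseline + baseline * (fold_per_month - 1.0) * months


for m in (1, 3, 6):
    print(f"month {m}: exponential {naive_projection(1.0, m):.0f}x, "
          f"linear {naive_projection(1.0, m, compounding=False):.0f}x")
```

Even the conservative linear version implies several-fold more intramuscular fat within a few months, which is why a 30-day doubling is hard to take at face value.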

The authors try to make the case that the increased muscle fat came from adipose tissue, but until they report body composition data, this is a tough sell. The elevated fasting free fatty acids support their claim, but unchanged meal-induced FFA suppression accompanied by lower adipose glycerol release does not; perhaps the missing glycerol is being re-esterified with nascent adipose-derived free fatty acids? Increased adipose tissue glucose uptake would be supported by the lower glucose levels, but that is already more than accounted for by the increased RQ (indicative of increased skeletal muscle glucose oxidation).

There are some mysteries in these findings, and the improper handling of the crossover data does not help. If this paper is true and my interpretation of the energy balance data is correct, resveratrol might just make you fat :/

Unless of course you’re a mouse, in which case it’ll make you better in every quantifiable measure.

calories proper

p.s. I don’t think resveratrol will really make you fat; I think this study elucidates nothing.
